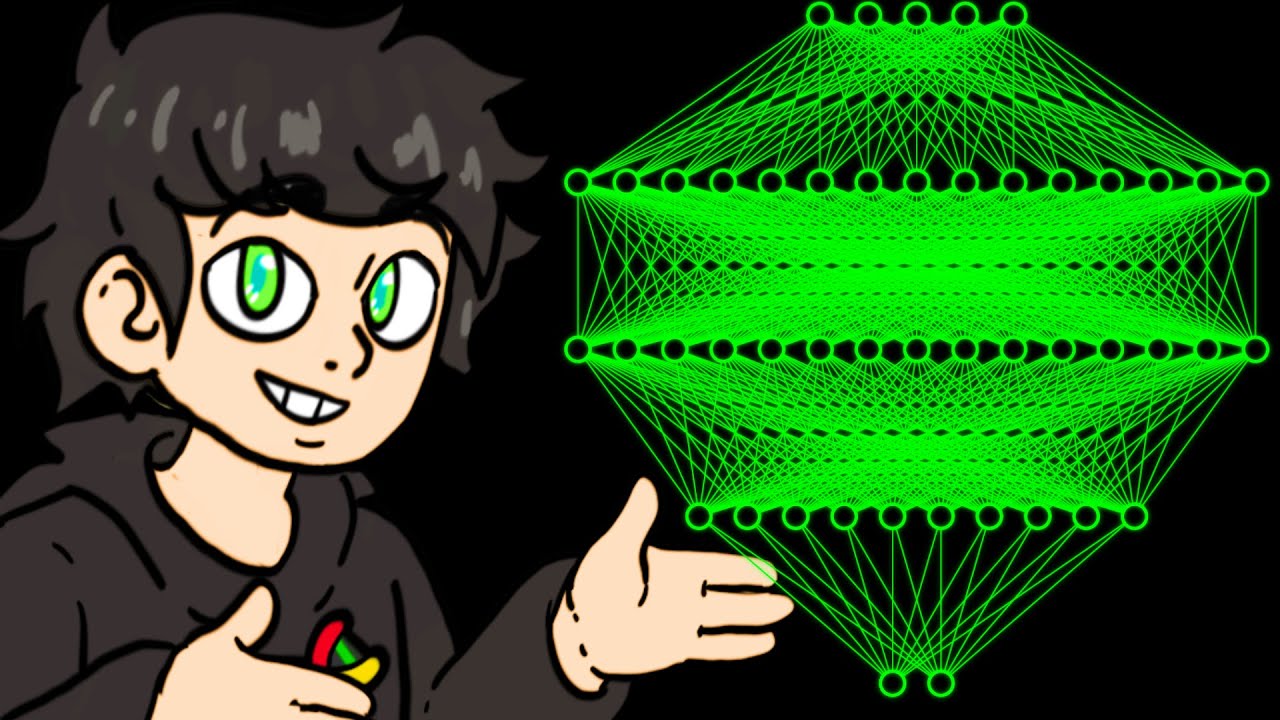

Why Neural Networks can learn (almost) anything

A video about neural networks, how they work, and why they're useful.

My Twitter: https://twitter.com/max_romana

SOURCES

Neural network playground: https://playground.tensorflow.org/

Universal Function Approximation:

Proof: https://cognitivemedium.com/ma....gic_paper/assets/Hor

Covering ReLUs: https://proceedings.neurips.cc..../paper/2017/hash/32c

Covering discontinuous functions: https://arxiv.org/pdf/2012.03016.pdf

Turing Completeness:

Networks of infinite size are Turing complete: Neural Computability I & II (behind a paywall, unfortunately, but cited in the following paper)

RNNs are Turing complete: https://binds.cs.umass.edu/pap....ers/1992_Siegelmann_

Transformers are Turing complete: https://arxiv.org/abs/2103.05247

More on backpropagation:

https://www.youtube.com/watch?v=Ilg3gGewQ5U

More on the Mandelbrot set:

https://www.youtube.com/watch?v=NGMRB4O922I

Additional Sources:

Neat explanation of the universal function approximation proof: https://www.youtube.com/watch?v=Ijqkc7OLenI

Where I got the hard-coded parameters: https://towardsdatascience.com..../can-neural-networks

Reviewers:

Andrew Carr https://twitter.com/andrew_n_carr

Connor Christopherson

TIMESTAMPS

(0:00) Intro

(0:27) Functions

(2:31) Neurons

(4:25) Activation Functions

(6:36) NNs can learn anything

(8:31) NNs can't learn anything

(9:35) ...but they can learn a lot

MUSIC

https://www.youtube.com/watch?v=SmkUY_B9fGg