GPT in PyTorch

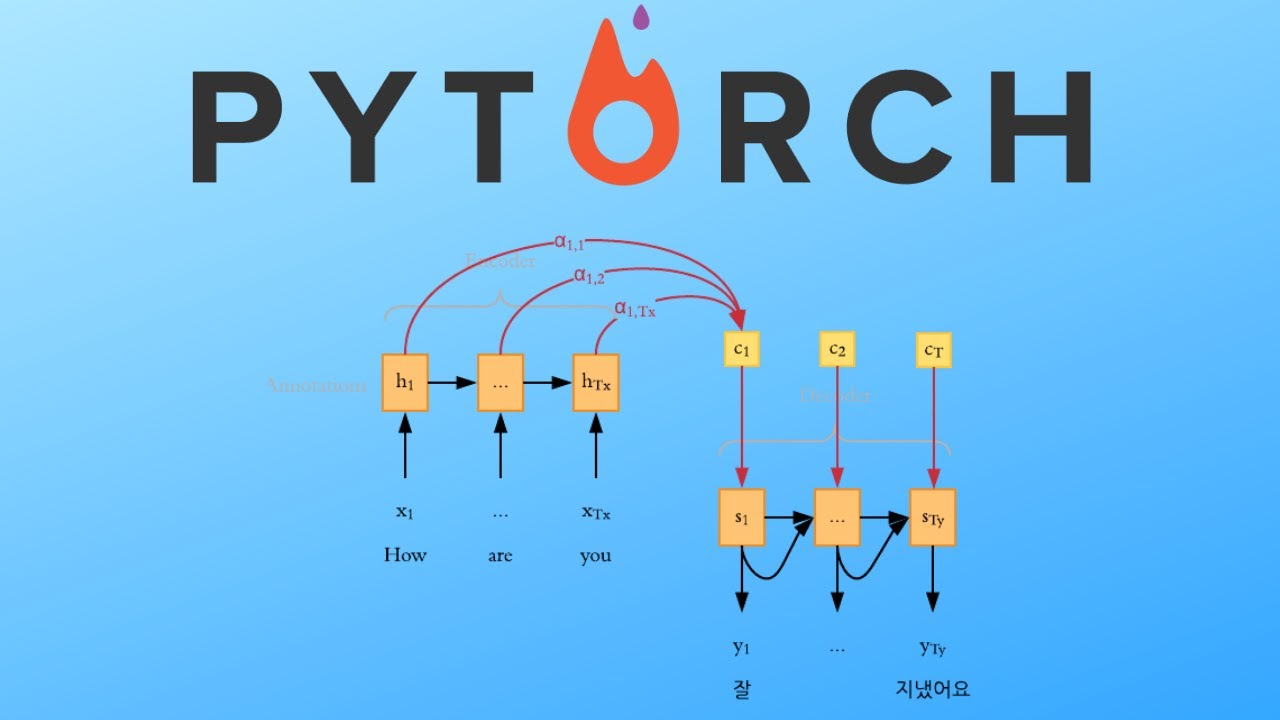

In this video, we are going to implement the GPT-2 model from scratch. We will focus only on inference, not on the training logic, and cover concepts such as self-attention, decoder blocks and the generation of new tokens.
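The masked self-attention and decoder blocks mentioned above can be illustrated with a minimal sketch of a GPT-2-style (pre-LayerNorm) decoder block in PyTorch. This is not the code from the video: the class name `DecoderBlock`, the default sizes and the use of `nn.MultiheadAttention` are assumptions made for illustration.

```python
import torch
import torch.nn as nn


class DecoderBlock(nn.Module):
    """Illustrative GPT-2-style decoder block (hypothetical name, pre-LayerNorm layout)."""

    def __init__(self, n_embd: int = 64, n_head: int = 4, p_drop: float = 0.0):
        super().__init__()
        self.ln_1 = nn.LayerNorm(n_embd)
        # batch_first=True -> inputs have shape (batch, seq_len, n_embd)
        self.attn = nn.MultiheadAttention(n_embd, n_head, dropout=p_drop, batch_first=True)
        self.ln_2 = nn.LayerNorm(n_embd)
        # Position-wise MLP with a 4x hidden expansion; tanh-approximated GELU
        # (assumed here to mirror GPT-2's activation)
        self.mlp = nn.Sequential(
            nn.Linear(n_embd, 4 * n_embd),
            nn.GELU(approximate="tanh"),
            nn.Linear(4 * n_embd, n_embd),
            nn.Dropout(p_drop),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        seq_len = x.shape[1]
        # Causal mask: True above the diagonal blocks position i from attending to j > i
        mask = torch.triu(
            torch.ones(seq_len, seq_len, dtype=torch.bool, device=x.device), diagonal=1
        )
        h = self.ln_1(x)
        attn_out, _ = self.attn(h, h, h, attn_mask=mask, need_weights=False)
        x = x + attn_out                # residual connection around attention
        x = x + self.mlp(self.ln_2(x))  # residual connection around the MLP
        return x
```

The causal mask is what makes this a decoder block: changing a later token leaves the outputs at all earlier positions unchanged, which is what allows tokens to be generated one at a time from left to right.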

Paper: https://openai.com/blog/better-language-models/

Code minGPT: https://github.com/karpathy/minGPT

Code transformers: https://github.com/huggingface..../transformers/blob/0

Code from the video: https://github.com/jankrepl/mi....ldlyoverfitted/tree/

00:00 Intro

01:32 Overview: Main goal [slides]

02:06 Overview: Forward pass [slides]

03:39 Overview: GPT module (part 1) [slides]

04:28 Overview: GPT module (part 2) [slides]

05:25 Overview: Decoder block [slides]

06:10 Overview: Masked self attention [slides]

07:52 Decoder module [code]

13:40 GPT module [code]

18:19 Copying a tensor [code]

19:26 Copying a Decoder module [code]

21:04 Copying a GPT module [code]

22:13 Checking if copying works [code]

26:01 Generating token strategies [demo]

29:10 Generating a token function [code]

32:34 Script (copying + generating) [code]

35:59 Results: Running the script [demo]

40:50 Outro
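The token generation chapters (26:01 and 29:10) compare strategies for picking the next token. A minimal sketch of two common strategies, greedy decoding and temperature plus top-k sampling, could look like the following. The function names `generate_next_token` and `generate` and their parameters are hypothetical, and `model` is only assumed to return logits of shape `(batch, seq_len, vocab_size)`.

```python
from typing import List, Optional

import torch


def generate_next_token(
    logits: torch.Tensor,
    temperature: float = 1.0,
    top_k: Optional[int] = None,
    sample: bool = True,
    generator: Optional[torch.Generator] = None,
) -> int:
    """Pick the next token id from the logits of the last position, shape (vocab_size,)."""
    if not sample:
        # Greedy decoding: always take the most likely token
        return int(torch.argmax(logits).item())
    logits = logits / temperature  # < 1 sharpens, > 1 flattens the distribution
    if top_k is not None:
        # Keep only the k largest logits; all others get probability 0 after softmax
        kth_value = torch.topk(logits, top_k).values[-1]
        logits = logits.masked_fill(logits < kth_value, float("-inf"))
    probs = torch.softmax(logits, dim=-1)
    return int(torch.multinomial(probs, num_samples=1, generator=generator).item())


@torch.no_grad()
def generate(model: torch.nn.Module, token_ids: List[int], n_new_tokens: int, **kwargs) -> List[int]:
    """Autoregressive loop: feed all tokens so far, append one new token per step."""
    ids = list(token_ids)
    for _ in range(n_new_tokens):
        x = torch.tensor([ids])  # shape (1, seq_len)
        logits = model(x)        # assumed shape (1, seq_len, vocab_size)
        ids.append(generate_next_token(logits[0, -1], **kwargs))
    return ids
```

Greedy decoding is deterministic but tends to repeat itself; sampling with a moderate temperature and a small `top_k` trades some of that determinism for more varied text while still avoiding very unlikely tokens.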

If you have any video suggestions or just want to chat, feel free to join the Discord server: https://discord.gg/a8Va9tZsG5

Twitter: https://twitter.com/moverfitted

Credits (logo animation):

Title: Conjungation · Author: Uncle Milk · Source: https://soundcloud.com/unclemilk · License: https://creativecommons.org/licenses/... · Download (9MB): https://auboutdufil.com/?id=600
